Motion Control (MPC and RL) of Go2 | RL Engineer

Coming Soon!

Full-Time Problem Solver

Jason Abi Chebli

I develop intelligent motion and autonomy for dynamic robotic systems. By blending classical control with modern learning methods such as reinforcement learning (RL) and imitation learning, I enable complex behaviors in legged robots and autonomous vehicles. My projects span the full robotics stack, from first-principles physics simulations to real-world autonomous exploration and VR-driven digital twins.


Developed an autonomous exploration framework for the Unitree Go2 quadruped, integrating Bayesian log-odds mapping and frontier-based planning to enable real-time navigation in unstructured environments.
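A minimal sketch of the two ingredients named above, assuming a 2D occupancy grid stored as nested lists of log-odds values. The inverse-sensor increments (`L_OCC`, `L_FREE`), clamping limits, and free/occupied thresholds are hypothetical values, not the parameters used on the Go2. A frontier cell is taken to be a known-free cell with at least one unknown 4-neighbour.

```python
import math

# Hypothetical inverse-sensor-model increments (log-odds units).
L_OCC = math.log(0.7 / 0.3)    # evidence added when a beam endpoint hits a cell
L_FREE = math.log(0.3 / 0.7)   # evidence added when a beam passes through a cell
L_MIN, L_MAX = -5.0, 5.0       # clamp so cells can still change state later


def update_cell(grid, r, c, hit):
    """Bayesian log-odds update: add the measurement's evidence, then clamp."""
    delta = L_OCC if hit else L_FREE
    grid[r][c] = max(L_MIN, min(L_MAX, grid[r][c] + delta))


def probability(l):
    """Convert log-odds back to an occupancy probability (logistic function)."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))


def classify(l, free_thresh=0.3, occ_thresh=0.7):
    p = probability(l)
    if p < free_thresh:
        return "free"
    if p > occ_thresh:
        return "occupied"
    return "unknown"


def find_frontiers(grid):
    """Return free cells that touch at least one unknown 4-neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if classify(grid[r][c]) != "free":
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and classify(grid[nr][nc]) == "unknown":
                    frontiers.append((r, c))
                    break
    return frontiers


if __name__ == "__main__":
    grid = [[0.0] * 5 for _ in range(5)]   # log-odds 0 means p = 0.5 (unknown)
    for c in range(3):                      # a beam along row 2 clears cells (2,0)..(2,2)
        for _ in range(2):
            update_cell(grid, 2, c, hit=False)
    print(find_frontiers(grid))             # [(2, 0), (2, 1), (2, 2)]
```

Working in log-odds turns the Bayesian update into a single addition per cell, and the clamp keeps long-observed cells from becoming too confident to correct.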

Developed an imitation learning pipeline for a 7-link humanoid, synthesizing a multi-phase expert trajectory through privileged-DOF kinematic sketching and physics-based refinement to enable a stable cartwheel handstand.

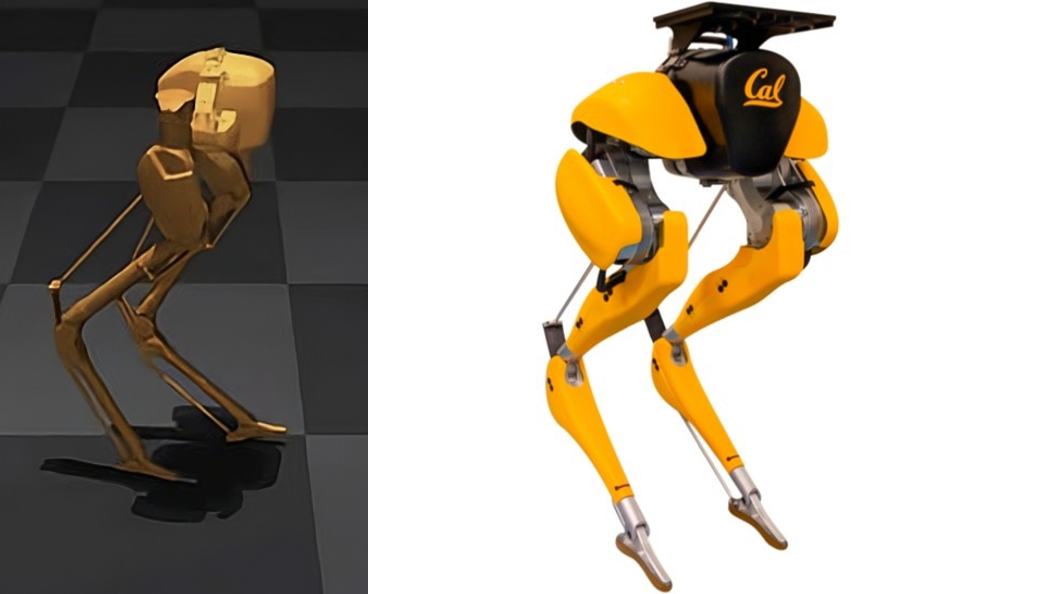
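The description does not say how the expert trajectory is consumed downstream. One common pattern for physics-based motion imitation (popularized by DeepMimic) rewards a policy for tracking a phase-indexed reference pose. The sketch below assumes hypothetical keyframes for three joints, linear interpolation between them, and a hypothetical scale `k`; it illustrates the idea and is not the pipeline used for the cartwheel.

```python
import math
from bisect import bisect_right

# Hypothetical keyframes: (phase in [0, 1], joint angles in rad).
KEYFRAMES = [
    (0.0, [0.0, 0.0, 0.0]),
    (0.5, [1.0, -0.5, 0.5]),
    (1.0, [2.0, 0.0, 0.0]),
]


def reference_pose(phase, keyframes=KEYFRAMES):
    """Linearly interpolate the reference joint angles at a phase in [0, 1]."""
    phases = [p for p, _ in keyframes]
    phase = min(max(phase, phases[0]), phases[-1])
    i = bisect_right(phases, phase) - 1
    if i >= len(keyframes) - 1:
        return list(keyframes[-1][1])
    (p0, q0), (p1, q1) = keyframes[i], keyframes[i + 1]
    t = (phase - p0) / (p1 - p0)
    return [a + t * (b - a) for a, b in zip(q0, q1)]


def imitation_reward(q, phase, k=2.0):
    """Pose-tracking term exp(-k * squared error): 1 on the reference, decaying away from it."""
    q_ref = reference_pose(phase)
    err = sum((a - b) ** 2 for a, b in zip(q_ref, q))
    return math.exp(-k * err)
```

The exponential keeps the reward bounded in (0, 1], so it can be combined with other terms (e.g. balance or energy) without one term dominating.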

Developed a contact force controller with a PD fallback for the 20-DOF Cassie biped, implementing a QP-based contact-force optimizer and a dynamic COM trajectory to maintain balance and reject significant external perturbations.
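A simplified, planar sketch of the force-distribution step, assuming two point contacts. It solves the equality-constrained QP "minimize ||f||^2 subject to A f = b", whose closed-form solution is f = A^T (A A^T)^-1 b. Here A maps the contact forces to the net force and the moment about the COM, and b is the wrench demanded by the desired COM acceleration. A full controller for Cassie would also impose friction-cone and unilateral-contact inequalities, which need a proper QP solver; those are omitted, and all names and numbers are hypothetical.

```python
def solve3(M, v):
    """Solve a 3x3 linear system M x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(M, v)]
    n = 3
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def contact_forces(feet, mass, acc_des, tau_des, g=9.81):
    """Minimum-norm forces [f1x, f1z, f2x, f2z] for two planar contacts.

    feet: [(x, z), (x, z)] contact positions relative to the COM.
    acc_des: desired COM acceleration (ax, az); tau_des: desired moment about the COM.
    """
    (r1x, r1z), (r2x, r2z) = feet
    # Rows: net x force, net z force, moment about the COM (r x f = rx*fz - rz*fx).
    A = [
        [1.0, 0.0, 1.0, 0.0],
        [0.0, 1.0, 0.0, 1.0],
        [-r1z, r1x, -r2z, r2x],
    ]
    b = [mass * acc_des[0], mass * (acc_des[1] + g), tau_des]
    AAt = [[sum(A[i][k] * A[j][k] for k in range(4)) for j in range(3)] for i in range(3)]
    lam = solve3(AAt, b)
    # f = A^T lambda
    return [sum(A[i][k] * lam[i] for i in range(3)) for k in range(4)]


if __name__ == "__main__":
    f = contact_forces([(-0.1, -0.9), (0.1, -0.9)], mass=30.0, acc_des=(0.0, 0.0), tau_des=0.0)
    print(f)
```

With the feet placed symmetrically and no desired acceleration or moment, each contact carries half the body weight (m*g/2) with zero tangential force; asymmetric stances or a nonzero desired moment redistribute the load automatically.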

Developed a complete hybrid simulation for a 3-DOF leg, designing a virtual constraint controller to track COM trajectories for stabilization, vertical jumps, and dynamic leaps.
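Virtual constraints are commonly encoded as an output y = h_a(q) - h_d(s) that the controller drives to zero, where s is a monotonic phase variable. The sketch below assumes a Bézier polynomial for the desired COM height (a frequent choice in the hybrid zero dynamics literature) and a plain PD law on the output; the coefficients and gains are hypothetical, and a full implementation would typically add feedback linearization through the leg's mass matrix.

```python
from math import comb


def bezier(alpha, s):
    """Evaluate a Bezier polynomial with coefficients alpha at phase s in [0, 1]."""
    m = len(alpha) - 1
    return sum(a * comb(m, k) * s**k * (1 - s) ** (m - k) for k, a in enumerate(alpha))


def bezier_dot(alpha, s):
    """Derivative of the Bezier polynomial with respect to s."""
    m = len(alpha) - 1
    return sum(
        m * (alpha[k + 1] - alpha[k]) * comb(m - 1, k) * s**k * (1 - s) ** (m - 1 - k)
        for k in range(m)
    )


def virtual_constraint_pd(com_z, com_z_dot, s, s_dot, alpha, kp=400.0, kd=40.0):
    """PD feedback on the virtual-constraint output y = com_z - h_d(s)."""
    y = com_z - bezier(alpha, s)
    y_dot = com_z_dot - bezier_dot(alpha, s) * s_dot   # chain rule: d/dt h_d(s) = h_d'(s) * s_dot
    return -kp * y - kd * y_dot


# Hypothetical crouch-then-extend COM height profile (metres) for a vertical jump.
JUMP_ALPHA = [0.9, 0.7, 0.7, 1.0]
```

Because the Bézier curve starts at the first coefficient and ends at the last, the endpoints of each motion phase (stand, crouch, take-off) can be pinned directly, which makes switching between stabilization, jumps, and leaps a matter of swapping coefficient sets.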

Developed a complete simulation for a bipedal walker from first principles, implementing dynamic modeling, physics-based validation, and trajectory optimization to achieve a stable gait.
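The transcription used for the trajectory optimization is not specified. As one common option, direct collocation turns the gait into a nonlinear program whose equality constraints are "defects" between consecutive knot points. The sketch below computes trapezoidal defects for a hypothetical pendulum-like swing-leg model; in practice these would be handed to an NLP solver together with a cost and the periodicity and impact boundary conditions.

```python
import math


def pendulum_dynamics(x, u, g=9.81, length=1.0):
    """Hypothetical stand-in model: x = (theta, theta_dot), torque-like input u."""
    theta, theta_dot = x
    return (theta_dot, -(g / length) * math.sin(theta) + u)


def trapezoidal_defects(xs, us, h, f=pendulum_dynamics):
    """Defects x_{k+1} - x_k - h/2 * (f(x_k, u_k) + f(x_{k+1}, u_{k+1})).

    A dynamically feasible trajectory drives every defect to zero.
    """
    defects = []
    for k in range(len(xs) - 1):
        fk, fk1 = f(xs[k], us[k]), f(xs[k + 1], us[k + 1])
        defects.append(tuple(
            b - a - 0.5 * h * (da + db)
            for a, b, da, db in zip(xs[k], xs[k + 1], fk, fk1)
        ))
    return defects
```

The trapezoidal rule integrates exactly any trajectory whose derivative varies linearly between knots, so a constant-input double integrator yields zero defects, a quick sanity check before plugging in the full walker dynamics.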

Developed a hybrid simulation for a 'compass gait' passive walker, implementing continuous dynamics, discrete impact mapping, and Poincaré map analysis to verify gait stability.
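Stability of a periodic gait is usually judged from the linearization of the Poincaré (stride-to-stride) map at its fixed point: the gait is locally stable when every eigenvalue of the Jacobian has magnitude below one. The sketch below estimates that Jacobian by central finite differences for a 2D reduced map. `toy_stride_map` is a hypothetical linear stand-in for "simulate one step, apply the impact map, return the post-impact state", which for the real compass gait requires integrating the continuous dynamics until heel strike.

```python
import cmath


def toy_stride_map(x):
    """Hypothetical stand-in for one stride of the walker, with fixed point (0.2, -1.0)."""
    dx, dy = x[0] - 0.2, x[1] + 1.0
    return (0.2 + 0.5 * dx + 0.1 * dy, -1.0 + 0.3 * dy)


def jacobian(P, x_star, eps=1e-6):
    """Central-difference Jacobian of a 2D map P at x_star."""
    J = [[0.0, 0.0], [0.0, 0.0]]
    for j in range(2):
        xp, xm = list(x_star), list(x_star)
        xp[j] += eps
        xm[j] -= eps
        fp, fm = P(xp), P(xm)
        for i in range(2):
            J[i][j] = (fp[i] - fm[i]) / (2 * eps)
    return J


def eigenvalues_2x2(J):
    """Roots of lambda^2 - trace*lambda + det = 0 (complex-safe)."""
    tr = J[0][0] + J[1][1]
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return ((tr + disc) / 2, (tr - disc) / 2)


def is_locally_stable(P, x_star):
    """True if all Poincare-map eigenvalues lie strictly inside the unit circle."""
    return all(abs(lam) < 1.0 for lam in eigenvalues_2x2(jacobian(P, x_star)))
```

The fixed point itself would normally be found first (e.g. with a Newton or root-finding step on P(x) - x = 0); the eigenvalue check only applies once P(x*) = x* holds to tolerance.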

Developed an immersive telerobotic system that enables precise real-time control of a KUKA robotic arm using VR hand-tracking and haptic feedback, enhancing remote manipulation through intuitive user interaction.

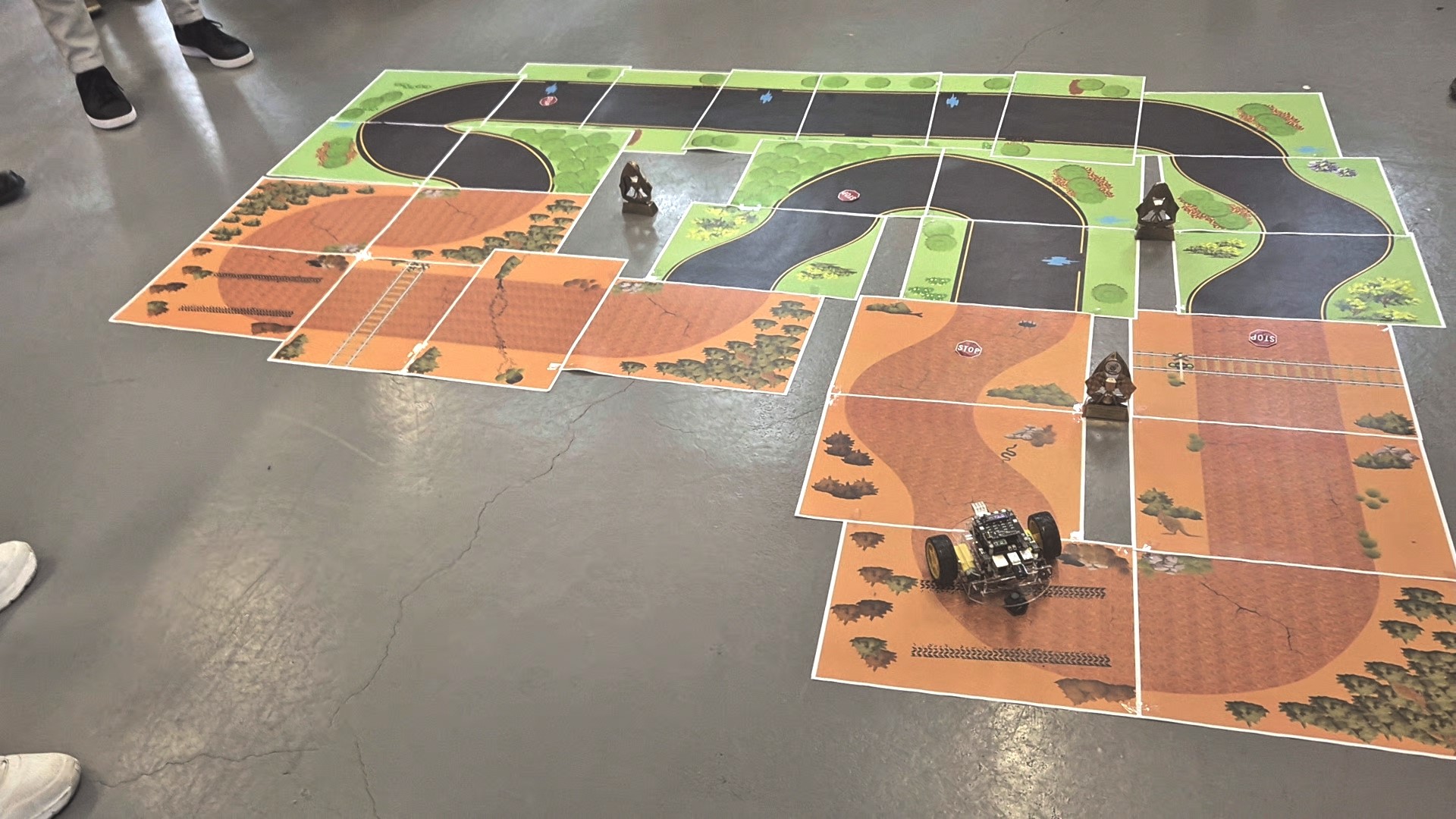
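The core of hand-tracking teleoperation is a mapping from the tracked hand pose to an end-effector target. The sketch below covers position only and assumes Unity's left-handed, Y-up frame (x right, y up, z forward) mapped to a right-handed, Z-up robot base frame (x forward, y left, z up), a convention commonly used by ROS-Unity bridges. The motion scale, workspace limits, and function names are hypothetical; orientation, clutching, and haptic feedback are omitted.

```python
def unity_to_robot(v):
    """Unity (x right, y up, z forward) -> robot base (x forward, y left, z up)."""
    ux, uy, uz = v
    return (uz, -ux, uy)


# Hypothetical axis-aligned workspace box for the end effector, in metres.
WORKSPACE_MIN = (0.3, -0.5, 0.1)
WORKSPACE_MAX = (0.8, 0.5, 0.9)


def ee_target(hand_pos, hand_ref, ee_home, scale=0.5):
    """Map the hand's displacement from hand_ref (captured when control was engaged)
    to a clamped end-effector position target around ee_home."""
    delta = unity_to_robot(tuple(h - r for h, r in zip(hand_pos, hand_ref)))
    target = [e + scale * d for e, d in zip(ee_home, delta)]
    return tuple(min(hi, max(lo, t)) for t, lo, hi in zip(target, WORKSPACE_MIN, WORKSPACE_MAX))
```

Scaling hand motion below 1:1 trades reach for precision, and clamping to a workspace box keeps noisy tracking from commanding targets the arm cannot safely reach.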

Developed an autonomous delivery robot that uses a convolutional neural network (CNN) to predict steering commands and color thresholding to detect stop signs, winning 1st place.

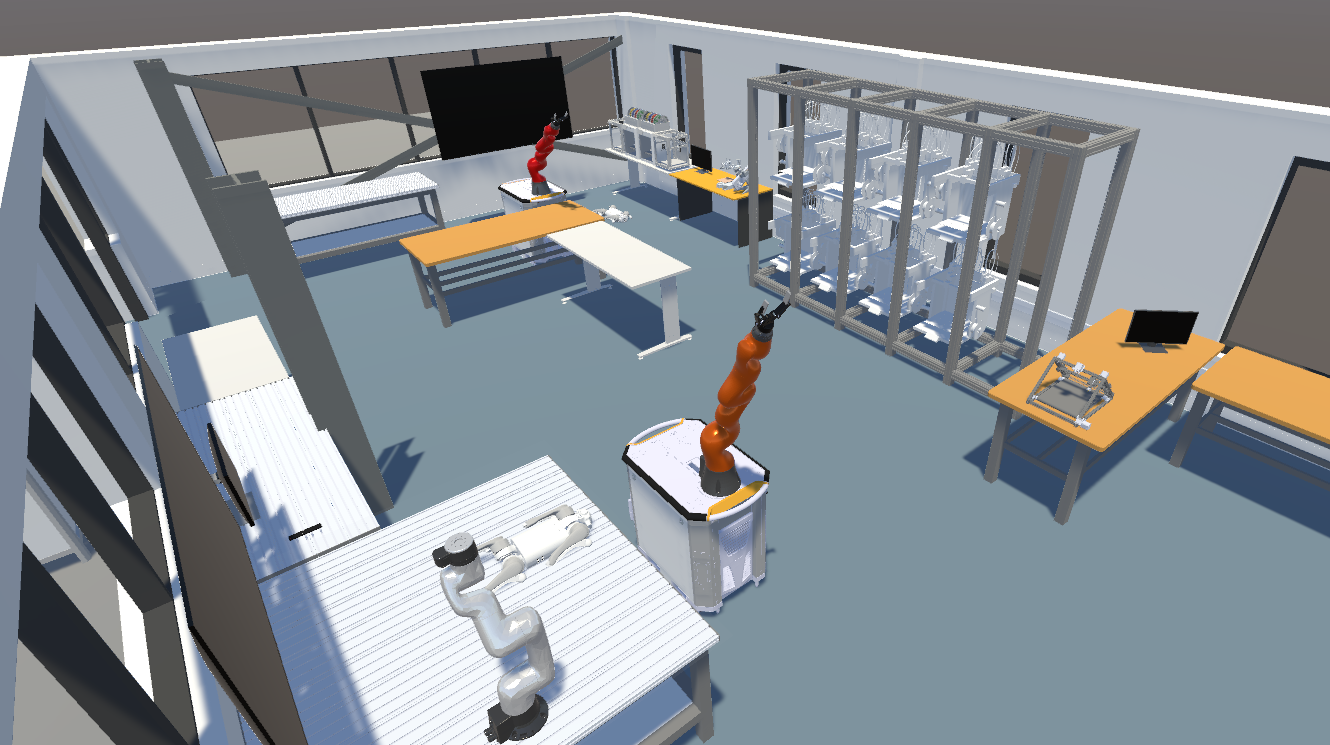
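Color thresholding for a red stop sign is usually done in HSV space, where red sits at both ends of the hue circle. The sketch below uses Python's standard `colorsys` module on an image represented as rows of (r, g, b) tuples in the range 0 to 255. The hue, saturation, and value limits and the 2% area threshold are hypothetical and would need tuning to the track's lighting; the CNN steering model is not covered.

```python
import colorsys


def is_red(pixel, hue_margin=0.05, min_sat=0.5, min_val=0.3):
    """True if an (r, g, b) pixel in 0..255 falls in the red band of HSV space."""
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # h, s, v all in [0, 1]
    # Red wraps around hue 0, so accept hues near either end of the circle.
    return (h <= hue_margin or h >= 1.0 - hue_margin) and s >= min_sat and v >= min_val


def stop_sign_detected(image, min_fraction=0.02):
    """Flag a stop sign when enough of the frame is saturated red."""
    total = sum(len(row) for row in image)
    red = sum(is_red(p) for row in image for p in row)
    return total > 0 and red / total >= min_fraction
```

Thresholding saturation and value as well as hue rejects dull reddish surfaces and dark shadows that would otherwise trigger false stops.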

Led the transition of the Monash Smart Manufacturing Lab digital twin from Python to Unity and built the new version, integrating 3D VR, CAD designs, URDF models, and two-way server communication for robot control.
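The message format between the Unity client and the server is not described. As an illustration only, the sketch below assumes a hypothetical newline-delimited JSON scheme over a stream socket with two message types: `command` messages sent from the digital twin to the robot, and `state` messages streamed back so the twin mirrors the physical robot. The field names and robot identifier are invented for the example.

```python
import json
import time

MESSAGE_TYPES = {"command", "state"}  # command: twin -> robot, state: robot -> twin


def encode(msg_type, robot_id, joints):
    """Serialize one message as a newline-terminated JSON line (simple stream framing)."""
    if msg_type not in MESSAGE_TYPES:
        raise ValueError(f"unknown message type: {msg_type!r}")
    payload = {"type": msg_type, "robot": robot_id, "joints": list(joints), "stamp": time.time()}
    return (json.dumps(payload) + "\n").encode("utf-8")


def decode_stream(buffer):
    """Split received bytes into complete messages; return (messages, leftover bytes)."""
    *lines, rest = buffer.split(b"\n")
    return [json.loads(line) for line in lines if line], rest


def apply_state(twin, msg):
    """Mirror a robot 'state' message into the twin's joint table."""
    if msg["type"] == "state":
        twin[msg["robot"]] = msg["joints"]
```

Keeping the leftover bytes matters because a socket read can end partway through a message; the partial line is prepended to the next read instead of being parsed early.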

Developed immersive analytics and programs for the Nao humanoid robot in collaboration with the IT team, enabling voice interactions and custom trick routines.